blazing-fast data science on GPUs

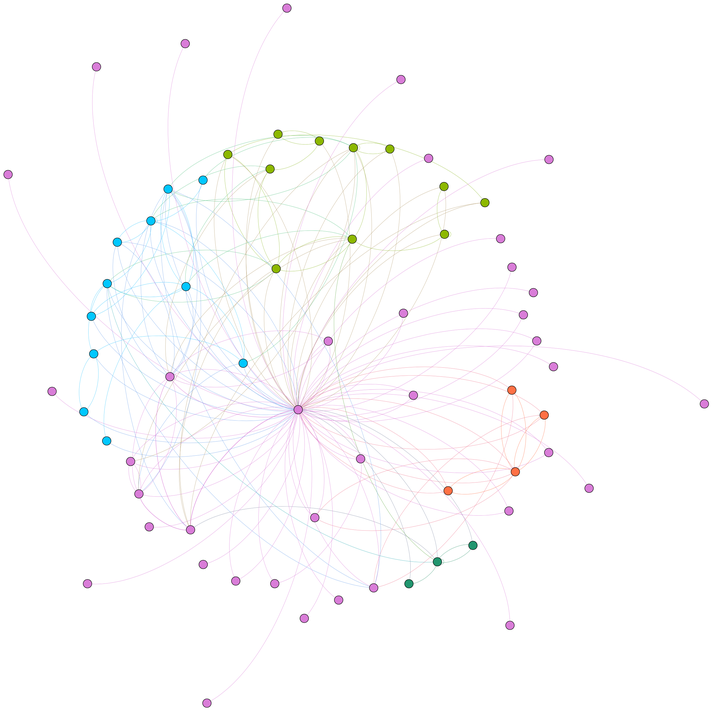

graph analytics with cuGraph – ego network

by Georg Heiler

In an ever more connected world, the datasets behind many use cases keep growing. For traditional graph tools running on the CPU, this can make your analyses painfully slow: calculations can easily run for hours, if not days. That makes the analytical workflow anything but interactive.

Accelerators like GPUs can speed things up, a lot! Even complex graph algorithms can now run at interactive speed, in seconds, and development is a breeze again. I experienced this myself on a graph of about 100 million edges. The traditional tools could not handle that load well, whereas cuGraph ran various graph algorithms on it in a matter of seconds.

In the past, GPU programming was very complex. You had to learn low-level CUDA and write code that copes with the intricacies of GPUs, such as limited memory and massive parallelism. NVIDIA developed RAPIDS AI to ease this pain by offering Python bindings whose APIs mostly follow those of popular Python packages like pandas or networkx.

However, cuGraph is still rather young. Various well-established graph algorithms have not been ported to it yet, and calculating an ego network is one of them. In the following, I will demonstrate how to add this functionality.

ego network for cuGraph

Using the NVIDIA System Management Interface, you can check which GPUs are available to you and how heavily they are utilized:

```
!nvidia-smi
```

You may hit a resource allocation error or an out-of-memory error. If so, consider restarting the Jupyter notebook kernel or manually killing the offending process, as outlined on Stack Overflow. Note that this pipeline kills every process whose `nvidia-smi` entry contains `python`, not only the one causing the problem:

```shell
nvidia-smi | grep 'python' | awk '{ print $3 }' | xargs -n1 kill -9

# show the user
ps -u -p <<pid>>
```
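The `awk '{ print $3 }'` step assumes that the PID is the third field of the `nvidia-smi` process table, and that layout may differ between driver versions. The sketch below is less brittle. It assumes a driver whose `nvidia-smi` supports the `--query-compute-apps` CSV interface and parses the PIDs from that output. The helper names are hypothetical. Unlike the pipeline above, it only lists candidate PIDs and does not kill anything:

```python
import subprocess


def parse_compute_apps(csv_text):
    """Parse `nvidia-smi --query-compute-apps=pid,process_name
    --format=csv,noheader` output into (pid, process_name) tuples."""
    processes = []
    for line in csv_text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        pid, name = (field.strip() for field in line.split(",", 1))
        processes.append((int(pid), name))
    return processes


def python_gpu_pids(processes):
    """Return the PIDs whose process name mentions 'python'."""
    return [pid for pid, name in processes if "python" in name]


def list_gpu_processes():
    """Ask nvidia-smi for the compute processes (needs an NVIDIA driver)."""
    result = subprocess.run(
        ["nvidia-smi", "--query-compute-apps=pid,process_name",
         "--format=csv,noheader"],
        capture_output=True, text=True, check=True,
    )
    return parse_compute_apps(result.stdout)


if __name__ == "__main__":
    # parse a hard-coded sample instead of calling nvidia-smi
    sample = "12345, /opt/conda/bin/python\n23456, /usr/bin/Xorg\n"
    print(python_gpu_pids(parse_compute_apps(sample)))  # [12345]
```

Killing a listed process then becomes a deliberate extra step, for example `os.kill(pid, signal.SIGKILL)` after checking its owner.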

If you have multiple GPUs available, it is good practice to pin your session to a specific GPU. Do this before importing cudf or cugraph:

```python
import os

os.environ["CUDA_VISIBLE_DEVICES"] = "1"
```

This especially helps to share resources fairly in a multi-user scenario.
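`CUDA_VISIBLE_DEVICES` is read when CUDA is initialized in a process. Setting it in a later notebook cell, after cudf or cugraph has already been imported, therefore typically has no effect. Below is a minimal sketch of a guard against that mistake. It assumes that checking `sys.modules` for the two RAPIDS packages is good enough, so other CUDA libraries such as CuPy or Numba are not covered. `pin_gpu` is a hypothetical helper name:

```python
import os
import sys


def pin_gpu(device_index):
    """Restrict this process to one GPU via CUDA_VISIBLE_DEVICES.

    Raises if cudf or cugraph is already imported, because CUDA has then
    most likely been initialized and the variable would be ignored.
    Heuristic: only the two module names below are checked.
    """
    already_loaded = [m for m in ("cudf", "cugraph") if m in sys.modules]
    if already_loaded:
        raise RuntimeError(
            "set CUDA_VISIBLE_DEVICES before importing " + ", ".join(already_loaded)
        )
    os.environ["CUDA_VISIBLE_DEVICES"] = str(device_index)


pin_gpu(1)
print(os.environ["CUDA_VISIBLE_DEVICES"])  # 1
```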

Import the required packages:

```python
import pandas as pd
import cudf
import cugraph
```

Load the data and create a graph. This particular karate data set is made available directly by the developers of cuGraph on GitHub. Download the CSV and read it with pandas:

```python
# space-separated file without a header: source, destination, weight
karate = pd.read_csv('karate.csv', header=None, sep=' ')
karate.columns = ['src', 'dst', 'weights']
```

Now move the data from the CPU to the GPU.

Admittedly, cuDF offers built-in readers that load data directly onto the GPU, and using them would speed up the analysis even more.

However, there are two reasons why I chose not to use them and to rely on traditional pandas instead:

- memory: On my real dataset, I use Spark to pre-aggregate the data. Even after aggregation it is still fairly large, with about 100 million edges. I also need a preprocessing step that converts the identifiers to cuGraph's src/dst ids. cuGraph usually offers a function for this renumbering, but here it fails. First, I cannot load all the data onto the GPU in the first place. Second, it runs out of memory even after I reduce the data a bit. This is why I use pandas for preprocessing and trim the data down further until it fits onto the GPU, as I only have a P100 with 16 GB of memory.
- parquet: cuDF can read a single parquet file. But big-data tools usually do not write a single file. Instead, they write a whole folder of many randomly named parquet files. In my opinion, it is simply easier to rely on the battle-tested functions offered by pandas, which happily read the whole directory.
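To illustrate what such a folder looks like, here is a sketch of picking out the data files of a Spark-style output directory. It assumes the usual Spark layout of `part-*.parquet` files next to bookkeeping files such as `_SUCCESS` and hidden `.crc` checksums. Both helper names are hypothetical. The loader uses pandas (with pyarrow) and imports it lazily, so the file-listing part runs without it:

```python
from pathlib import Path


def list_parquet_parts(directory):
    """Return the parquet data files of a Spark-style output directory,
    skipping bookkeeping files such as _SUCCESS and hidden .crc files."""
    return sorted(
        p for p in Path(directory).iterdir()
        if p.is_file()
        and p.suffix == ".parquet"
        and not p.name.startswith(("_", "."))
    )


def load_parquet_dir(directory):
    """Read every part with pandas and concatenate them into one frame."""
    import pandas as pd  # lazy import: only needed when actually loading

    parts = list_parquet_parts(directory)
    return pd.concat((pd.read_parquet(p) for p in parts), ignore_index=True)
```

The concatenated pandas frame can then be trimmed and handed to `cudf.from_pandas` as below.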

```python
karate = cudf.from_pandas(karate)
```

Let’s create the cuGraph graph object:

```python
G_karate = cugraph.DiGraph()
G_karate.from_cudf_edgelist(karate, source='src', destination='dst', edge_attr='weights', renumber=False)
```
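With `renumber=False`, cuGraph uses the `src`/`dst` values directly as vertex ids. They should therefore already be integers, ideally contiguous and starting at 0. For `karate.csv` they are already integers. Real data with arbitrary identifiers needs the CPU-side renumbering mentioned in the memory point above. Below is a plain-Python sketch of that idea. The helper `renumber_edges` is hypothetical. In pandas, `pd.factorize` on the combined src/dst values does the same job:

```python
def renumber_edges(edges):
    """Map arbitrary vertex identifiers to contiguous ints 0..n-1.

    Returns the renumbered edge list and the mapping (original id -> int),
    so results can be translated back after the GPU computation.
    """
    id_map = {}
    renumbered = []
    for src, dst, weight in edges:
        # setdefault assigns the next free integer the first time an id is seen
        s = id_map.setdefault(src, len(id_map))
        d = id_map.setdefault(dst, len(id_map))
        renumbered.append((s, d, weight))
    return renumbered, id_map


edges, id_map = renumber_edges(
    [("alice", "bob", 1.0), ("bob", "carol", 2.0), ("carol", "alice", 1.0)]
)
print(edges)   # [(0, 1, 1.0), (1, 2, 2.0), (2, 0, 1.0)]
print(id_map)  # {'alice': 0, 'bob': 1, 'carol': 2}
```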

Now, finally, let's derive the ego network. This functionality is currently not a built-in part of cuGraph, but cuGraph offers the building blocks to create such a function quickly.

cuGraph offers various graph traversal functions like breadth-first search (BFS). Starting from a given vertex, BFS visits every vertex reachable from it. For each of them it records the distance, i.e. the number of hops from the start vertex.
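In cuGraph, `bfs` returns this result as a cudf DataFrame that includes `vertex` and `distance` columns. To make the idea concrete, here is a plain-Python sketch of what a BFS distance computation does on a small adjacency dict. It runs on the CPU and is not cuGraph's implementation. `bfs_distances` is a hypothetical name:

```python
from collections import deque


def bfs_distances(adjacency, start):
    """Hop distance from `start` to every reachable vertex (directed edges).

    `adjacency` maps each vertex to an iterable of its out-neighbours.
    Unreachable vertices are simply absent from the result.
    """
    distances = {start: 0}
    queue = deque([start])
    while queue:
        vertex = queue.popleft()
        for neighbour in adjacency.get(vertex, ()):
            if neighbour not in distances:  # first visit = shortest hop count
                distances[neighbour] = distances[vertex] + 1
                queue.append(neighbour)
    return distances


adjacency = {0: [1], 1: [0, 2, 3], 2: [4], 3: [], 4: []}
print(bfs_distances(adjacency, 1))  # {1: 0, 0: 1, 2: 1, 3: 1, 4: 2}
```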

In a first step, the resulting candidate list is filtered to contain only vertices within the desired maximum distance from the ego node.

In a second step, the edge list is filtered to contain only entries where the src or dst column is contained in the list of candidates.
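The two steps correspond to the `distance <= level` mask and the `isin` masks in `make_ego_csv`. Here they are as a plain-Python sketch, assuming the distances come as a dict like the BFS output and the edges as `(src, dst, weight)` tuples. `ego_edges` is a hypothetical name:

```python
def ego_edges(distances, edges, level):
    """Return the candidate vertices within `level` hops of the ego node
    and every edge whose src or dst is one of those candidates."""
    # step 1: vertices at most `level` hops away
    candidates = {v for v, d in distances.items() if d <= level}
    # step 2: edges touching at least one candidate
    kept = [e for e in edges if e[0] in candidates or e[1] in candidates]
    return candidates, kept


distances = {1: 0, 0: 1, 2: 1, 3: 1, 4: 2}
edges = [(0, 1, 1.0), (1, 0, 1.0), (1, 2, 1.0), (1, 3, 1.0), (2, 4, 1.0)]
candidates, kept = ego_edges(distances, edges, 0)
print(candidates, len(kept))  # {1} 4
```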

cuGraph already offers `cugraph.subgraph(G_karate, ego_candidates)`. However, on my real data set this always deleted the main ego node.

The ego node is the one vertex an ego graph must retain, so I hand-rolled the extraction of the desired entries from the edge list:

```python
ego_id = 1


def make_ego_csv(level):
    # BFS from the ego node: one row per vertex with its hop distance
    cudf_res = cugraph.bfs(G_karate, ego_id)

    # keep only vertices at most `level` hops away from the ego node
    ego_candidates = cudf_res[cudf_res.distance <= level].vertex
    print(ego_candidates.shape)

    # keep every edge that starts or ends at one of the candidates
    sub_edges_g = karate[(karate.src.isin(ego_candidates) | karate.dst.isin(ego_candidates))]
    print(sub_edges_g.shape)

    # rename to gephi supported names
    sub_edges_g.columns = ['Source', 'Target', 'Weight']

    if level > 1:
        # to save memory in gephi, reduce the details if even the sub-graph gets large
        sub_edges_g.drop(columns=['Weight']).to_csv(f"ego_{level}_graph.csv", index=False)
    else:
        sub_edges_g.to_csv(f"ego_{level}_graph.csv", index=False)

    print('**********')
    return sub_edges_g


sub_edges_g = make_ego_csv(0)
make_ego_csv(1)
make_ego_csv(2)
```

```
(1,)
(18, 3)
**********
(10,)
(80, 3)
**********
(23,)
(148, 3)
**********
```
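The `isin` filter keeps every edge with *either* endpoint among the candidates. It therefore also includes edges that lead one hop beyond the requested level, which is why level 0 already returns the 18 edges incident to the ego node. An induced subgraph, which is what `networkx.ego_graph` returns, keeps only edges with *both* endpoints among the candidates. The plain-Python sketch below contrasts the two filters on a toy edge list. Both function names are hypothetical, and which variant fits depends on the analysis:

```python
def incident_edges(edges, vertices):
    """Edges with at least one endpoint in `vertices` (the isin filter above)."""
    return [e for e in edges if e[0] in vertices or e[1] in vertices]


def induced_edges(edges, vertices):
    """Edges with both endpoints in `vertices` (an induced subgraph)."""
    return [e for e in edges if e[0] in vertices and e[1] in vertices]


edges = [(0, 1), (1, 0), (1, 2), (1, 3), (2, 4)]
candidates = {0, 1, 2, 3}  # vertices within one hop of ego node 1
print(len(incident_edges(edges, candidates)))  # 5
print(len(induced_edges(edges, candidates)))   # 4
```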

Finally, as a sanity check: the ego node is indeed still in the level-0 result.

```python
sub_edges_g[(sub_edges_g.Source == ego_id) | (sub_edges_g.Target == ego_id)]
```

| | Source | Target |
|---|---|---|
| 0 | 0 | 1 |
| 16 | 1 | 0 |
| 17 | 1 | 2 |
| 18 | 1 | 3 |
| 19 | 1 | 7 |
| 20 | 1 | 13 |
| 21 | 1 | 14 |
| 22 | 1 | 15 |
| 23 | 1 | 16 |
| 24 | 1 | 19 |
| 26 | 2 | 1 |
| 36 | 3 | 1 |
| 51 | 7 | 1 |
| 68 | 13 | 1 |
| 73 | 14 | 1 |
| 75 | 15 | 1 |
| 78 | 16 | 1 |
| 84 | 19 | 1 |

summary

cuGraph can run various graph analyses in a matter of seconds. But it is still a little immature, and some functions, like calculating an ego network, are missing. You will run into edge cases, especially as datasets grow larger. As mentioned above, some preprocessing might become necessary.

But overall, adding the function for the ego network is simple!

This is a repost. The original post can be found here: https://georgheiler.com/2020/04/03/blazing-fast-data-science-on-gpus/